Halfway

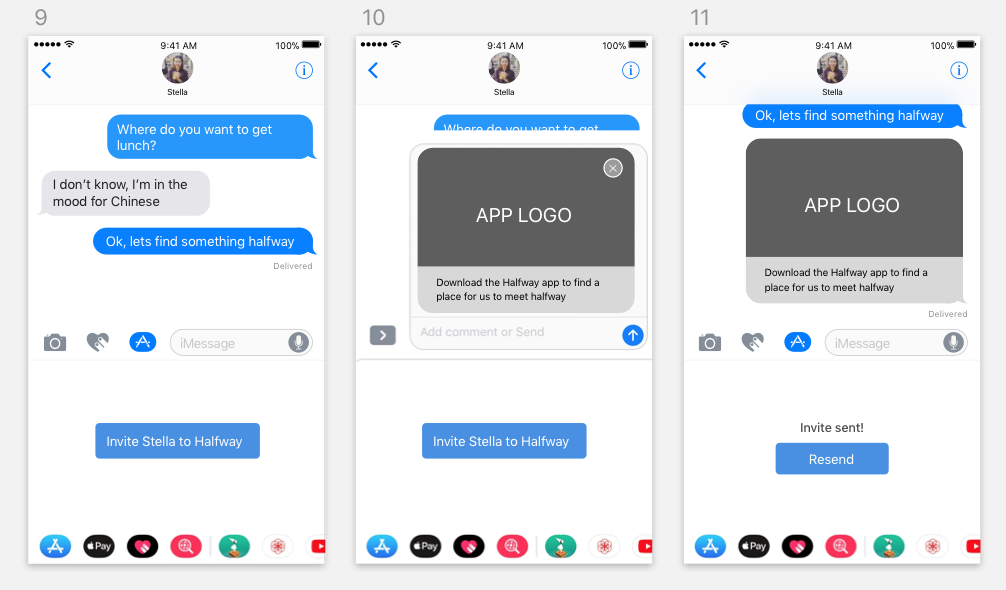

From last week’s feedback, we learned that iOS does not let apps read users’ messages. We therefore decided to create an app that helps two or more people find a place to meet at a midpoint between them, based on the information they share on Facebook. It draws on personal preferences from each user’s Facebook account, together with their locations, to work out what kind of place they would like to meet at.
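The post does not say how the midpoint is calculated. The following is a minimal sketch, assuming each person shares a latitude/longitude in degrees and every person counts equally. It converts each point to a 3D unit vector on a sphere, averages the vectors, and converts the average back to latitude/longitude. The function name `geographic_midpoint` is hypothetical and is not part of the app.

```python
import math


def geographic_midpoint(points):
    """Return the (lat, lon) midpoint, in degrees, of a list of (lat, lon) pairs.

    Hypothetical helper: assumes equal weight per person and a spherical Earth.
    The result is undefined for exactly antipodal points (the vectors cancel out).
    """
    if not points:
        raise ValueError("need at least one location")

    # Sum each location as a 3D unit vector.
    x = y = z = 0.0
    for lat, lon in points:
        phi, lam = math.radians(lat), math.radians(lon)
        x += math.cos(phi) * math.cos(lam)
        y += math.cos(phi) * math.sin(lam)
        z += math.sin(phi)

    # Average the vectors.
    n = len(points)
    x, y, z = x / n, y / n, z / n

    # Convert the averaged vector back to latitude/longitude.
    mid_lon = math.atan2(y, x)
    mid_lat = math.atan2(z, math.hypot(x, y))
    return math.degrees(mid_lat), math.degrees(mid_lon)


if __name__ == "__main__":
    # Two people sharing their locations as (lat, lon).
    print(geographic_midpoint([(0.0, 0.0), (0.0, 90.0)]))
```

The sketch works through 3D vectors instead of simply averaging the raw latitude and longitude values. Plain averaging gives wrong results near the ±180° meridian: points at 179° and -179° would average to 0° instead of 180°. A real app would then search for candidate places near the returned point, which this sketch does not show.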

Insights from the user test on 04/05

1. Users care about the price of each place, so the price range should be shown prominently. Fix: use “$”, “$$” and “$$$” to indicate low to high prices.

2. Users may want to change their votes. Fix: add an “Edit Choices” button that appears after they submit their votes.

3. Users were confused by the drag icons: “They look like a burger menu.” Fix: remove the drag icons and replace them with a text hint.

4. Users need to know when their friends have made a decision. Fix: the app sends a notification once both people have decided.

5. Users want to check that the chosen place really is at a midpoint. Fix: show both people’s locations on the map.

6. Users want to know more details about the places.

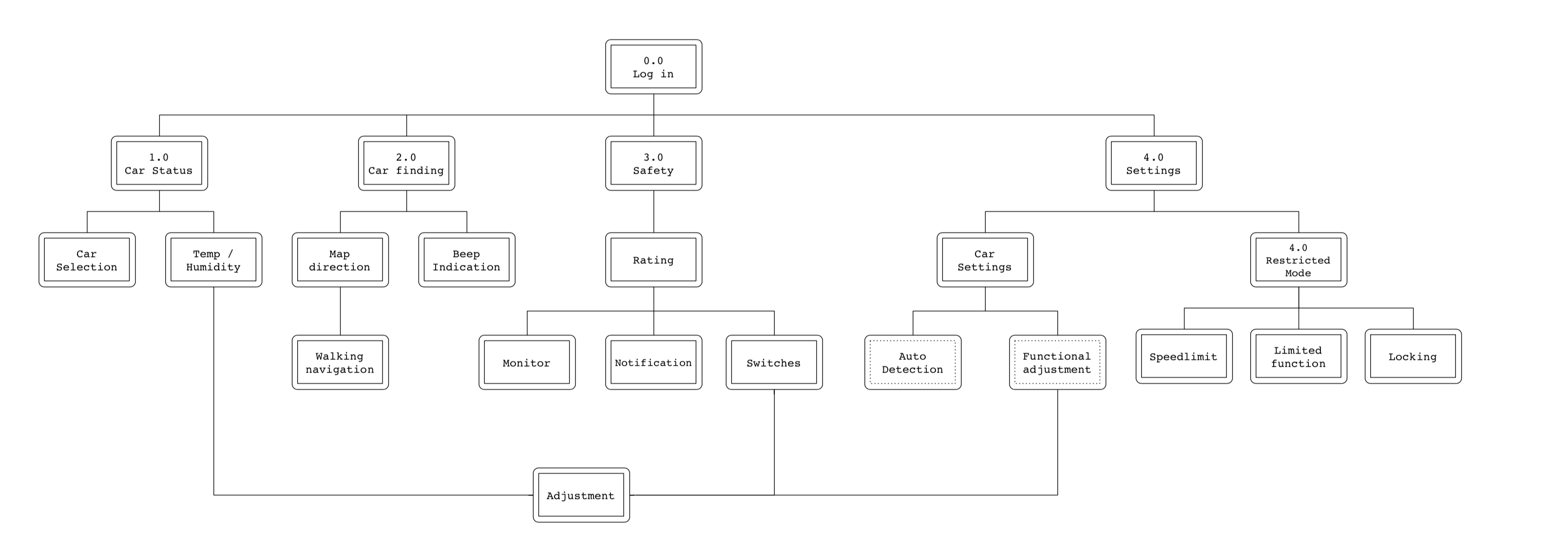

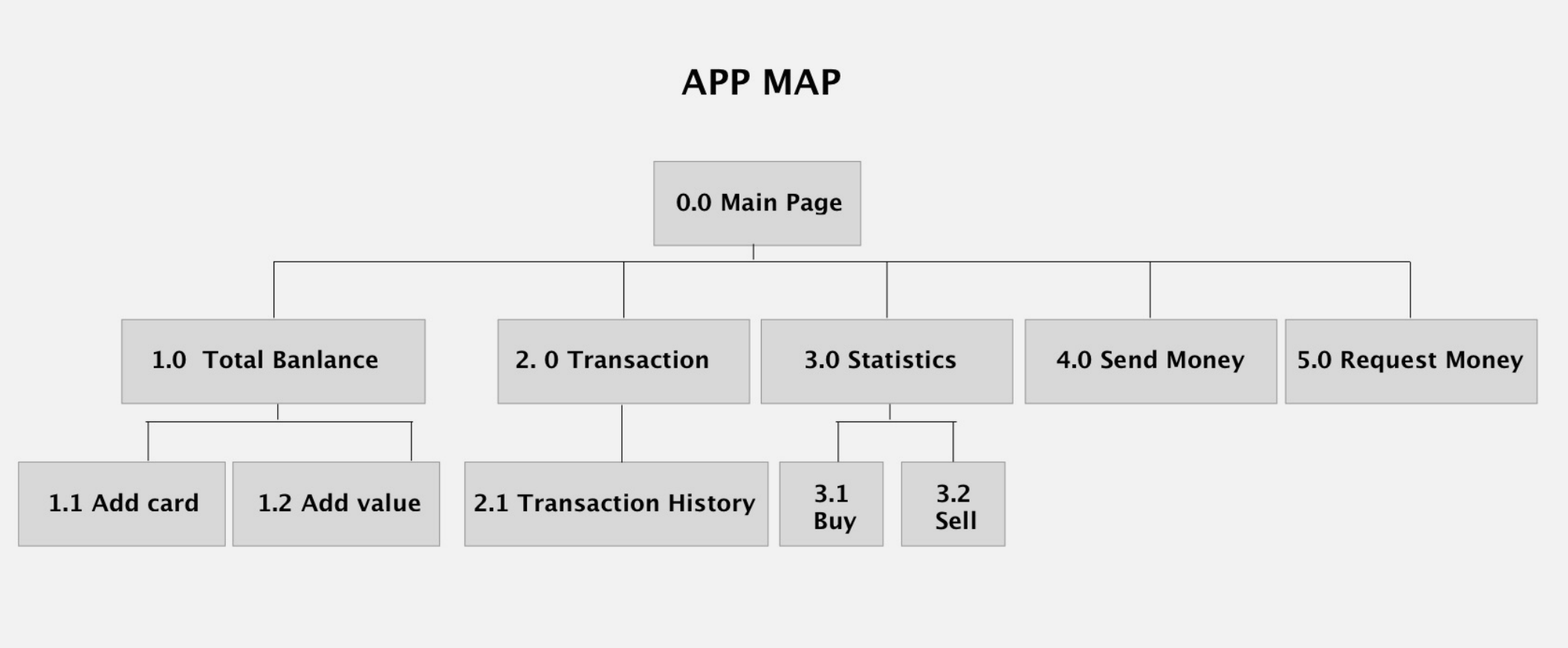

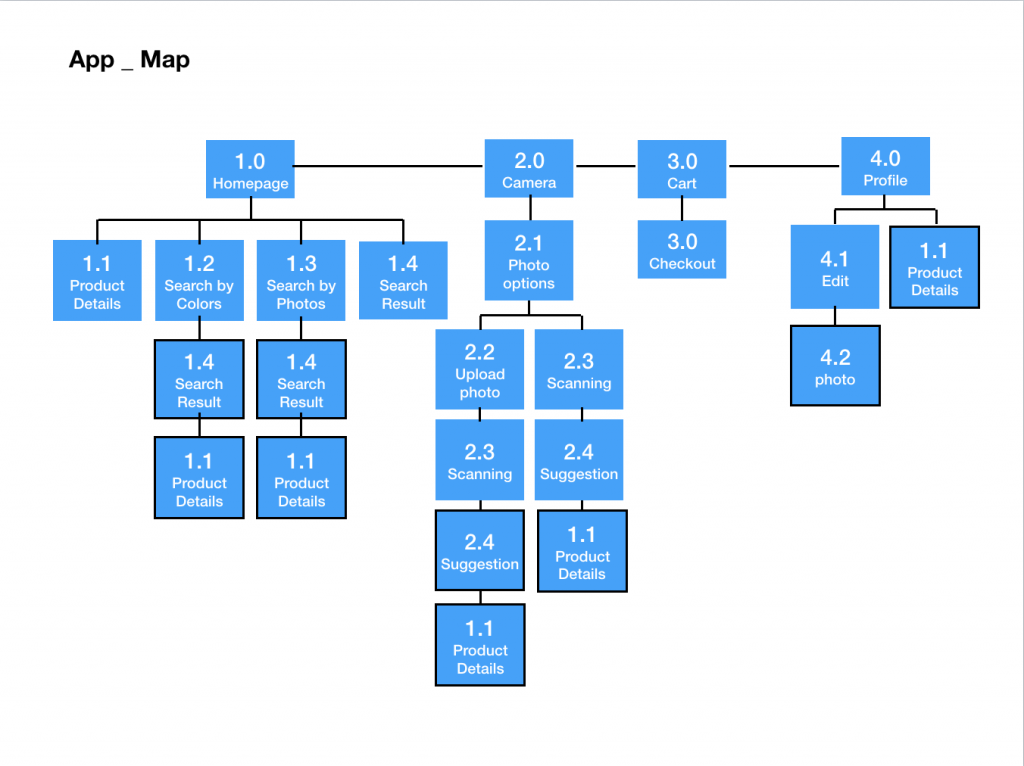

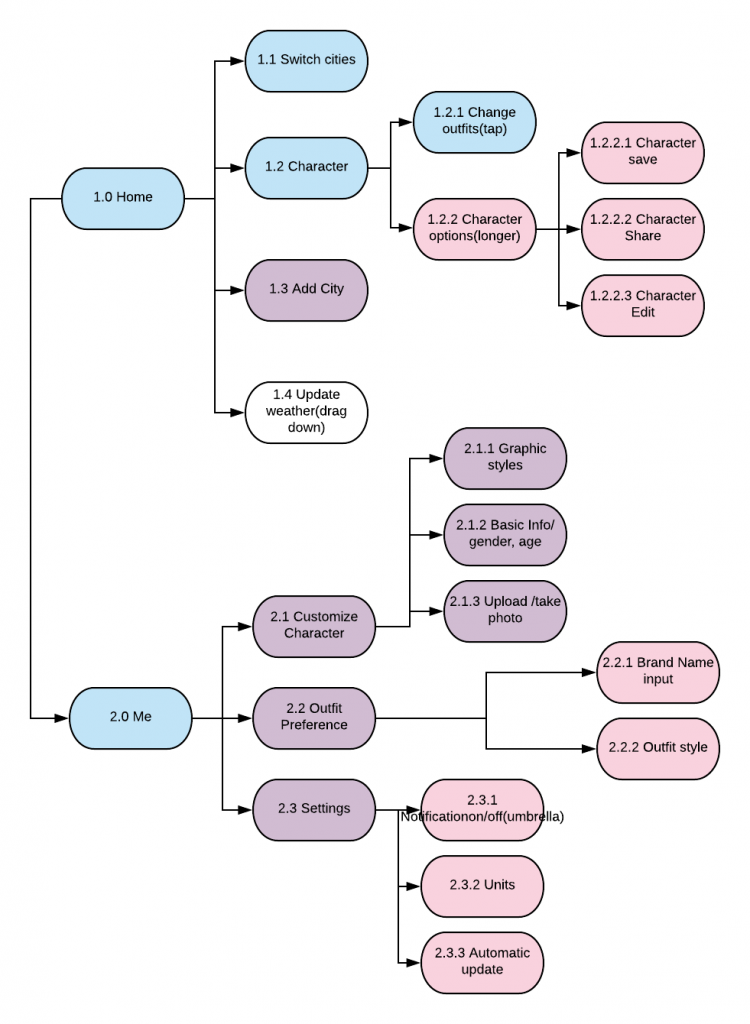

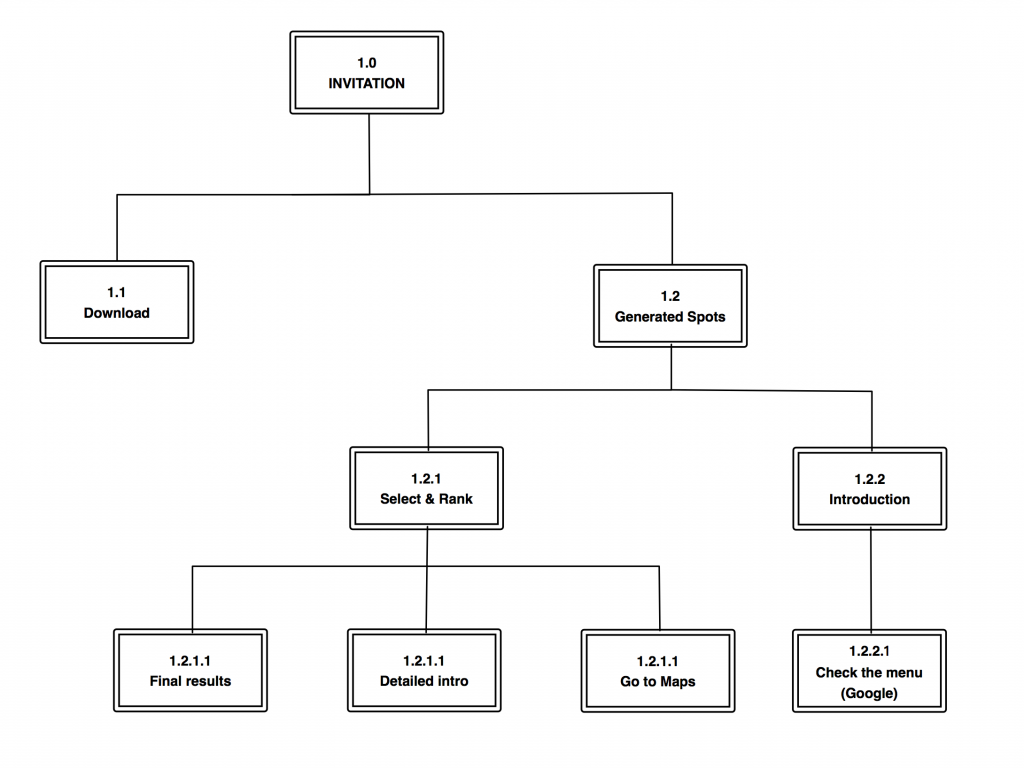

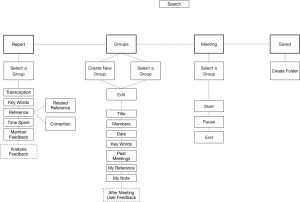

Iterated App Map

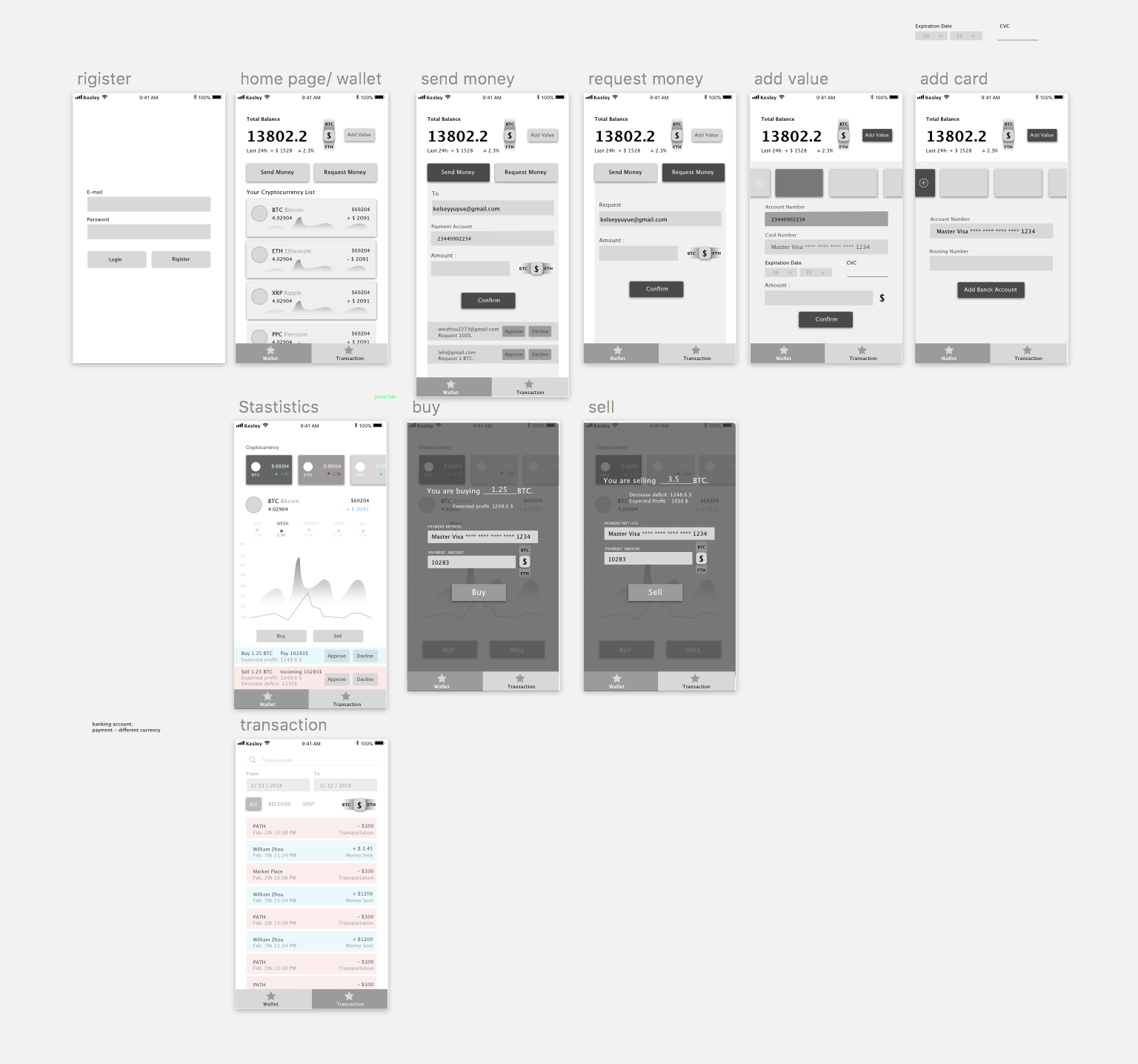

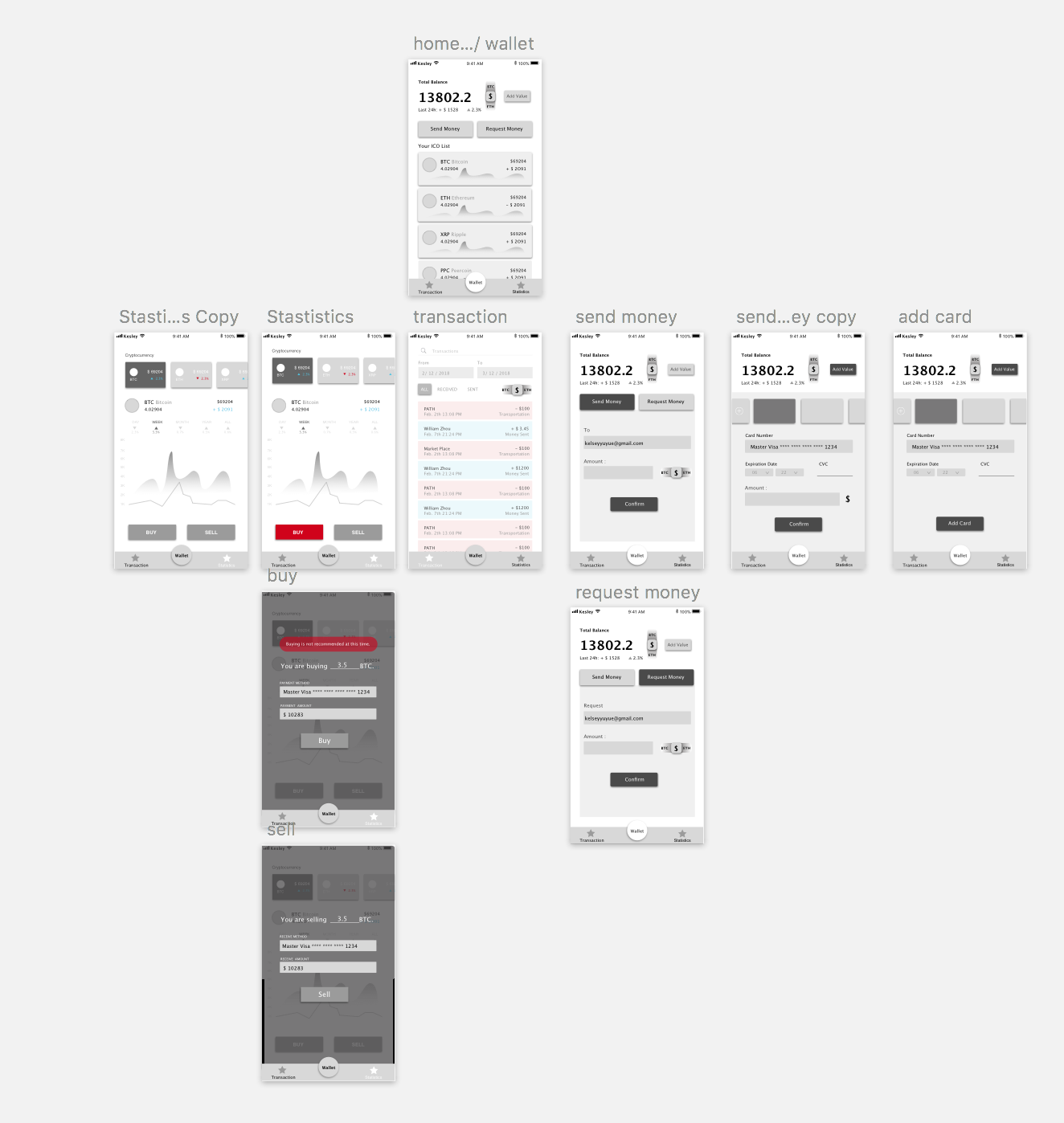

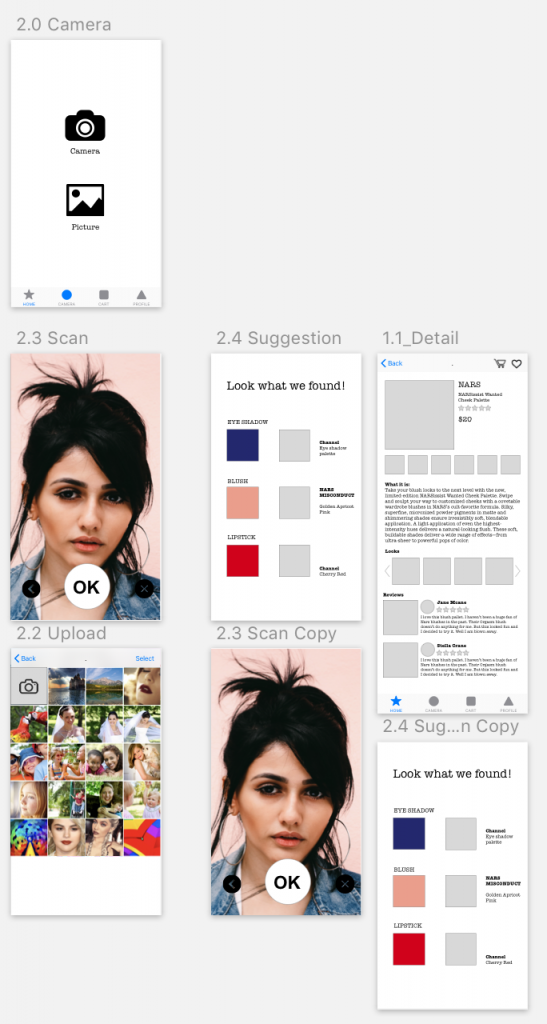

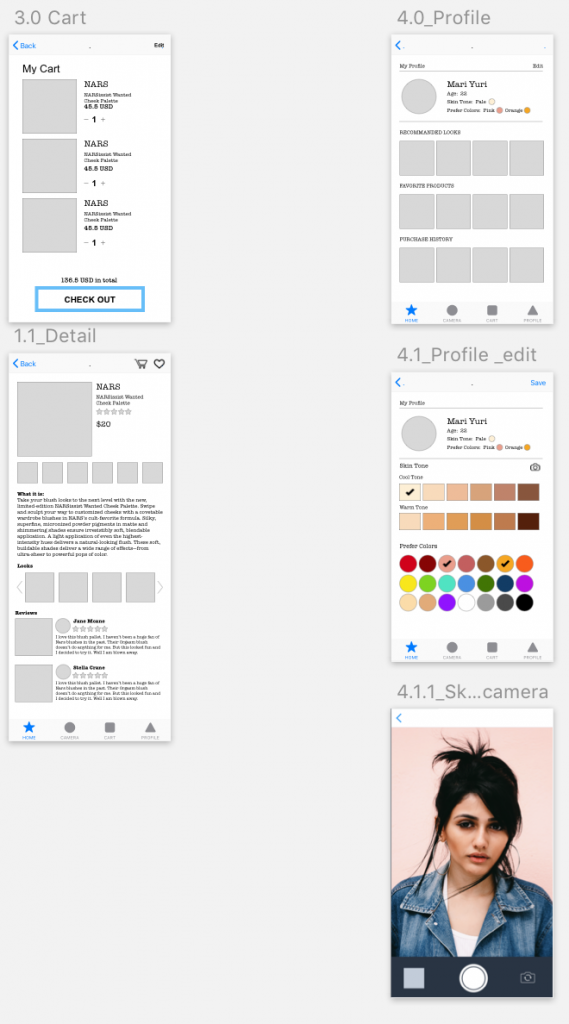

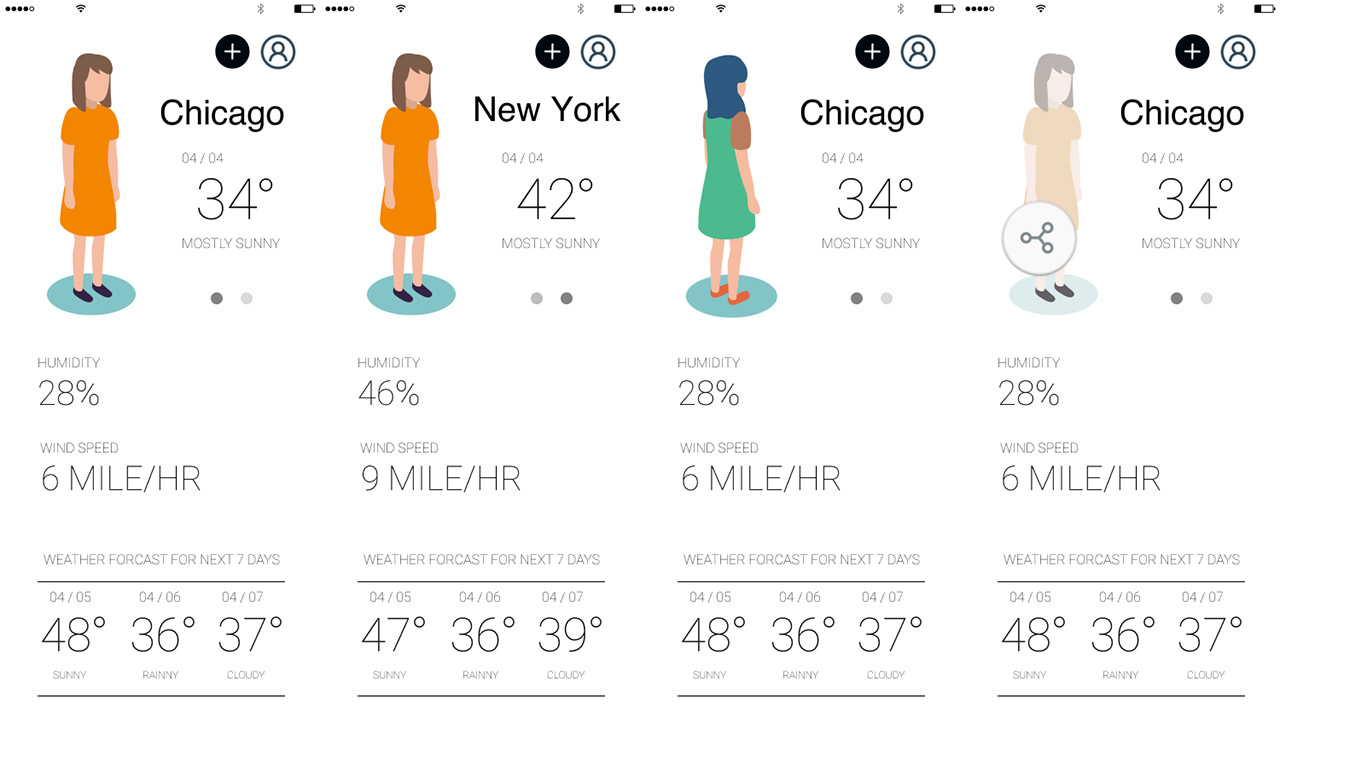

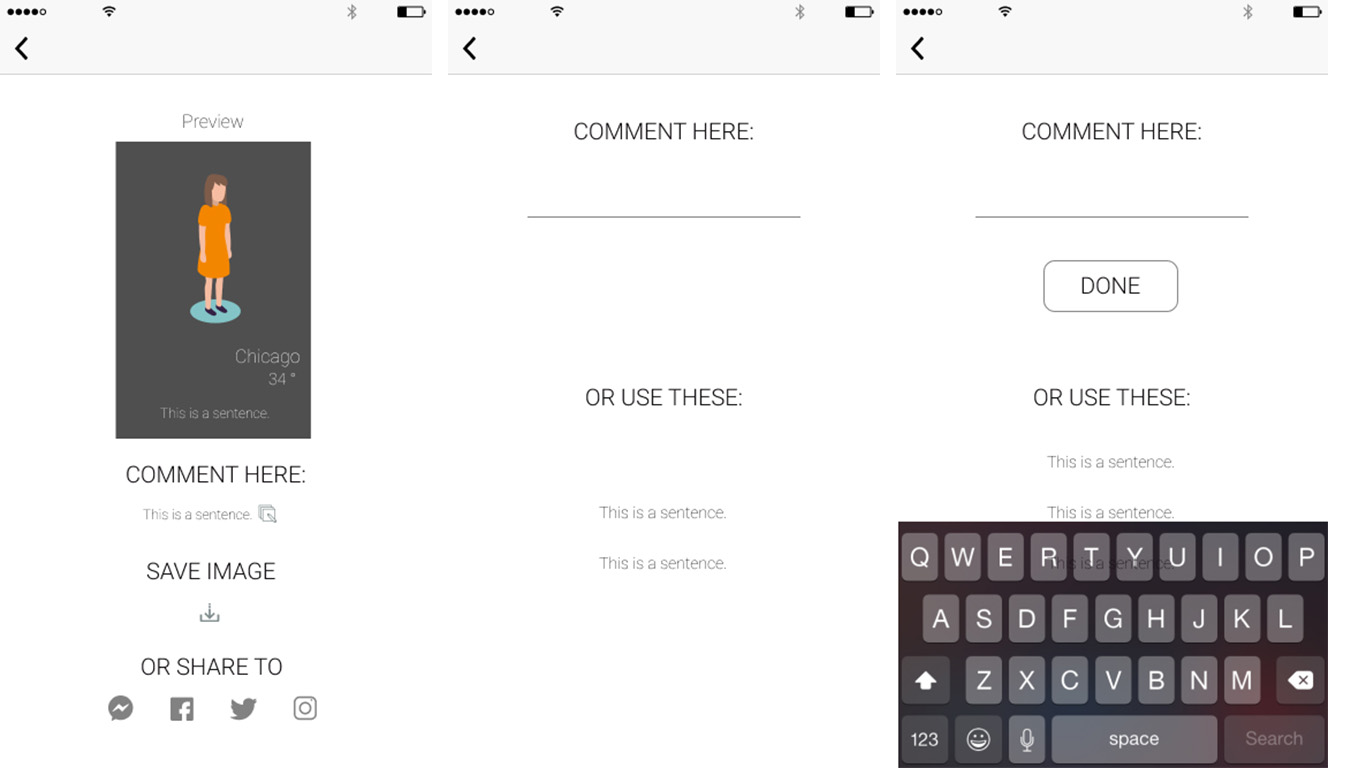

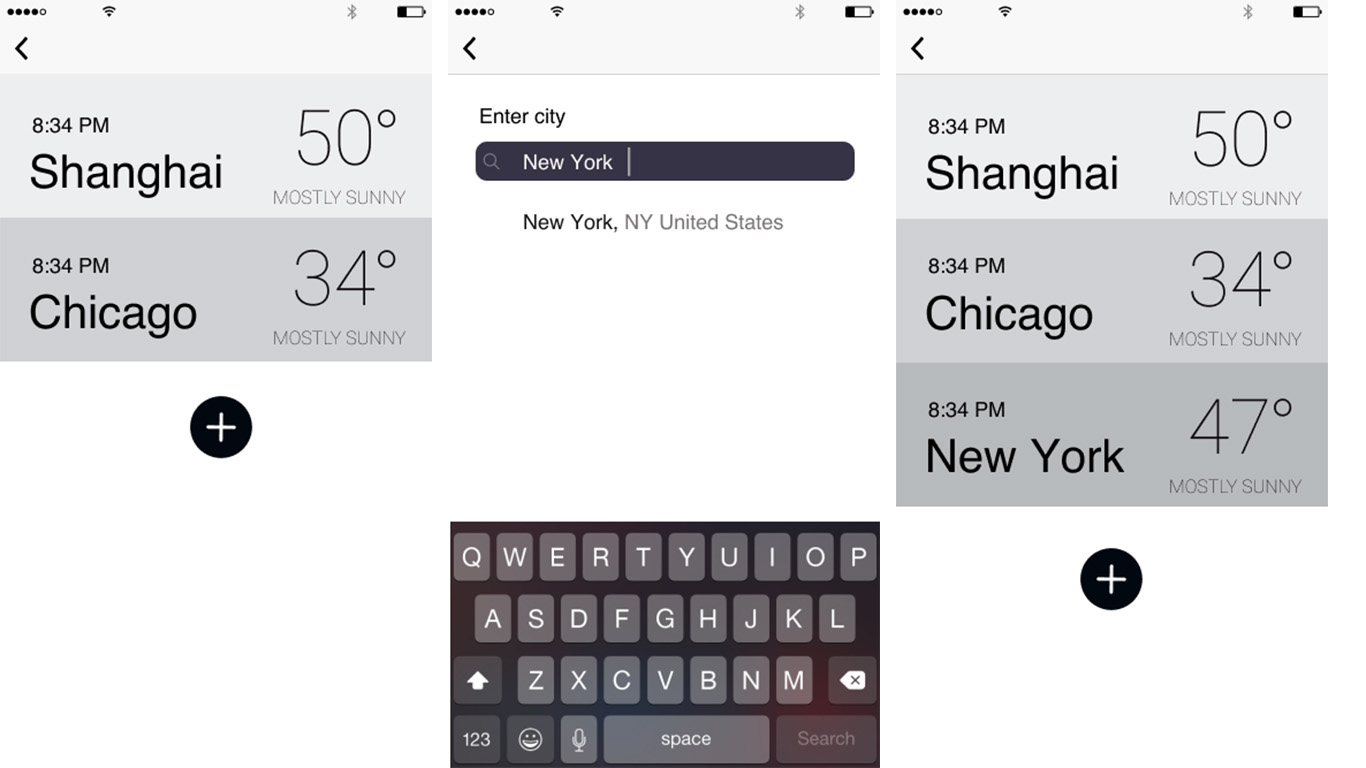

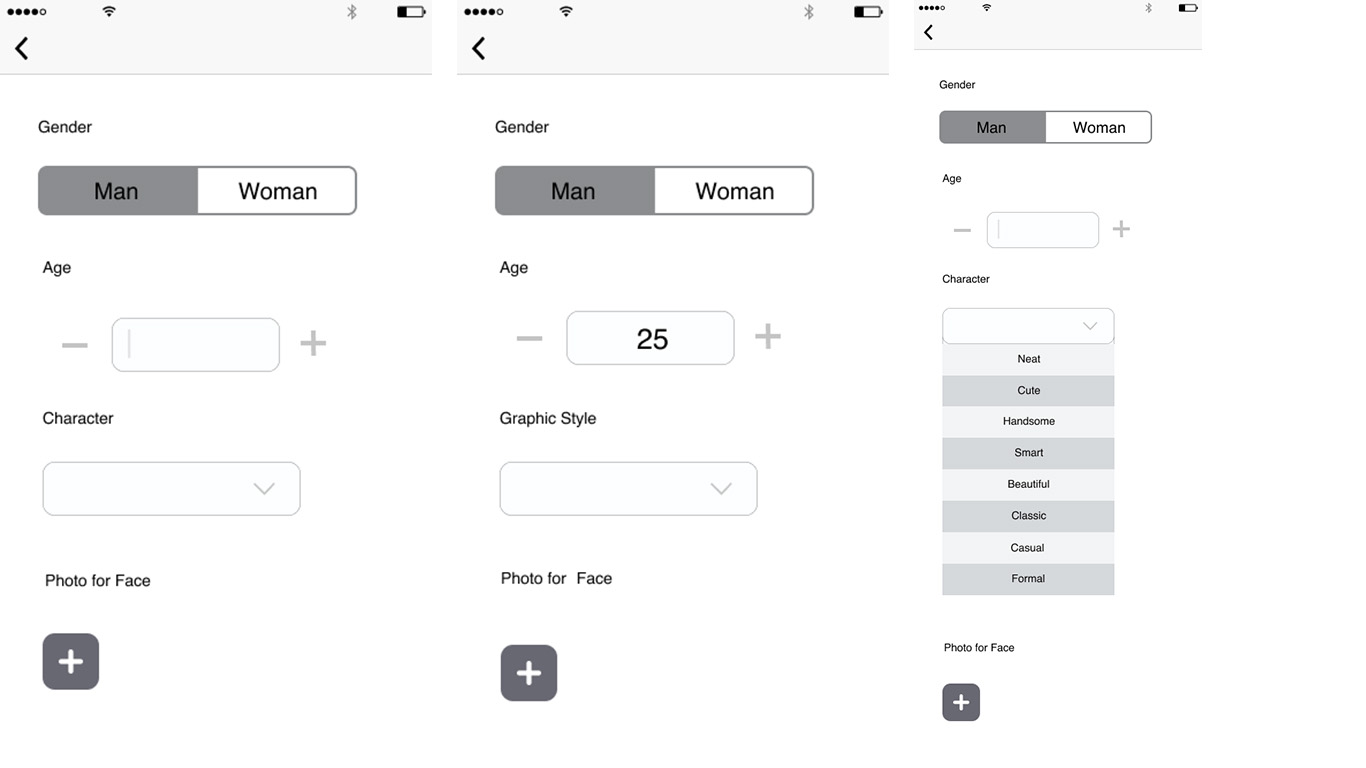

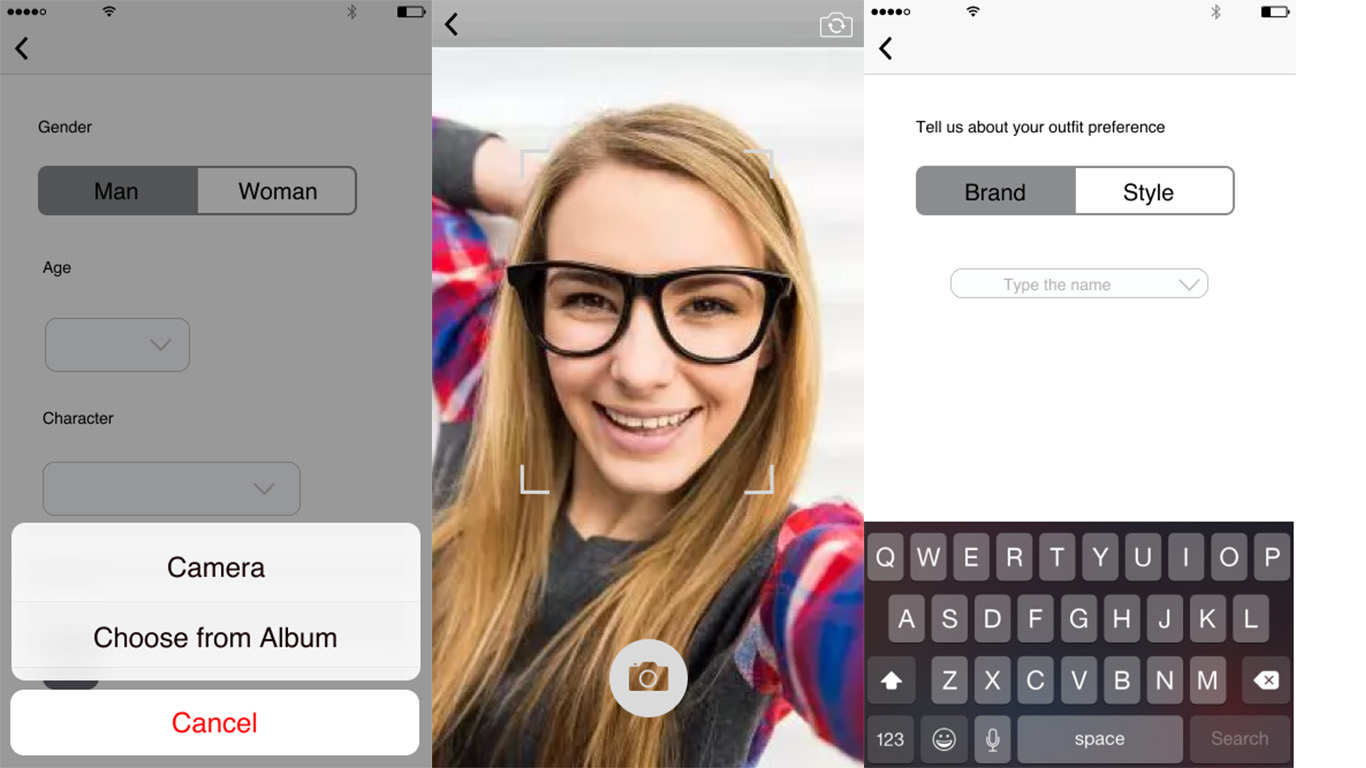

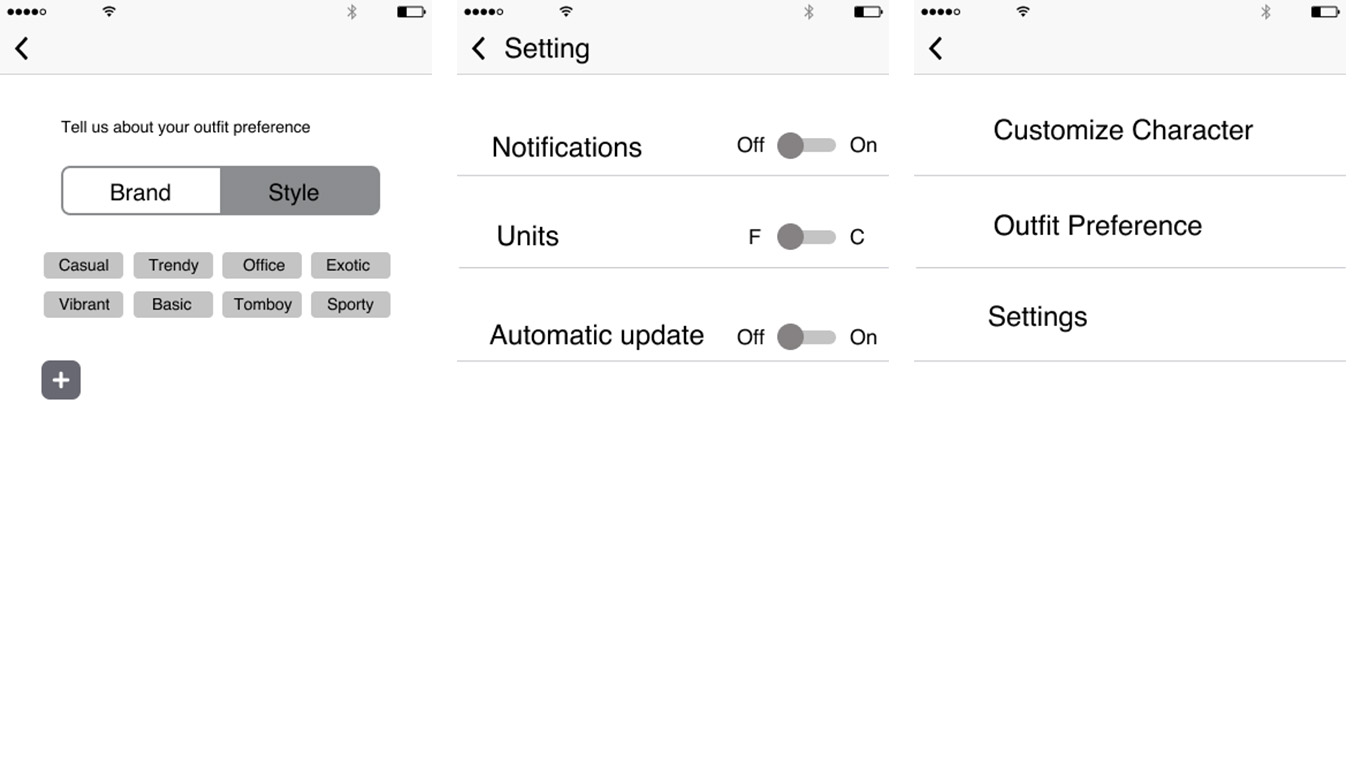

Iterated Wireframe